Responsible AI: Important for Data-driven Fintechs

- Ashutosh Dubey, Senior Lead Business Analytics, Market Innovation

Over the past decade, artificial intelligence (AI) systems have gained traction because of their potential to unlock economic value and help mitigate social challenges. In recent years, both the development and the adoption of AI have surged. AI is projected to add USD 957 billion, or 15 percent of India's current gross value added, to the country's economy by 2035. According to industry projections, the AI software market will grow from USD 10.1 billion in 2018 to USD 126 billion by 2025. The technology's strong value proposition may further accelerate this adoption.
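As a rough check on how steep that market projection is, the implied compound annual growth rate (CAGR) over the seven years from 2018 to 2025 can be worked out from the two figures quoted above, taking both at face value:

```latex
\text{CAGR} = \left(\frac{126}{10.1}\right)^{1/7} - 1 \approx (12.48)^{1/7} - 1 \approx 0.434
```

That is roughly 43 percent growth per year, sustained for seven years.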

NITI Aayog released a report on Responsible AI in February 2021. The report builds on the National Strategy for Artificial Intelligence (NSAI), which brought AI to the forefront of the Government's reform agenda by highlighting its potential to improve outcomes in sectors such as healthcare, agriculture, and education. AI opens up a new path for government interventions in these sectors, because it allows specialised services (e.g., remote diagnostics or precision agriculture advisory) to be delivered at scale and improves access to government welfare services. Furthermore, the NSAI emphasises the need for a robust ecosystem that facilitates cutting-edge research, not only to solve these societal problems and serve as a platform for AI innovation, but also to enable India to become a strategic global leader by scaling these solutions globally. Fintech companies are also relying on AI to offer their services; Buy Now, Pay Later (BNPL) is a prominent example. Customised offerings to end customers will increasingly be driven by AI systems within Fintech firms as well.

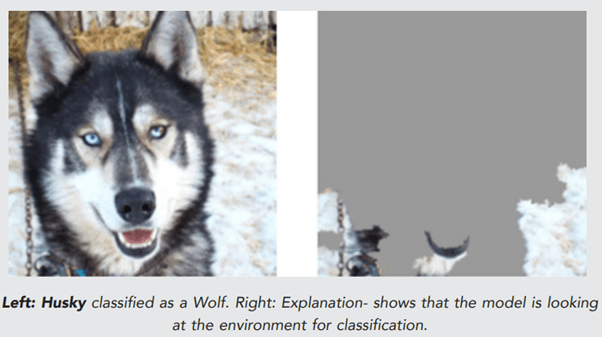

Fig: A famous image classification problem: wolf or husky?

Ribeiro et al. (2016) deliberately trained an image classifier to label photos of animals as either a wolf or a husky, using training images in which every wolf appeared against a snowy background. When the model's predictions were explained, it turned out that the model was not distinguishing the animals at all; it was classifying the images by their background. The model did reasonably well on the data used to test it, but it would not perform as well in reality. Responsible AI is an ethical and legal framework for addressing challenges like this one. Responsible AI initiatives are driven by the desire to resolve ambiguity about who is responsible if something goes wrong.
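The failure mode in the wolf/husky example, a model leaning on a shortcut in the background, can be illustrated with a toy occlusion test: mask one input feature at a time and see how much the prediction moves. This is a minimal sketch under stated assumptions, not LIME itself. The feature names, the "model" and its probabilities are all hypothetical, and a real image would be split into regions (superpixels) rather than three named flags.

```python
# Toy illustration of a "shortcut" classifier, inspired by the wolf/husky case.
# Hypothetical throughout: the features, the model and the scores are invented,
# and the occlusion test below is a simplified stand-in, not LIME itself.

# Each "image" is reduced to a few named binary features.
FEATURES = ["pointed_ears", "thick_fur", "snow_background"]


def shortcut_model(image: dict) -> float:
    """Hypothetical classifier that only learned to look at the background.

    Returns the predicted probability that the image shows a wolf.
    """
    return 0.95 if image["snow_background"] else 0.05


def occlusion_importance(model, image: dict) -> dict:
    """Score each feature by how much the prediction moves when it is masked (set to 0)."""
    baseline = model(image)
    scores = {}
    for name in FEATURES:
        masked = dict(image)
        masked[name] = 0  # "occlude" this one feature, keep the rest
        scores[name] = abs(baseline - model(masked))
    return scores


if __name__ == "__main__":
    # A husky photographed in snow: its animal features fit a wolf and a husky
    # alike, so only the background decides the prediction.
    husky_in_snow = {"pointed_ears": 1, "thick_fur": 1, "snow_background": 1}
    print("P(wolf) =", shortcut_model(husky_in_snow))  # 0.95, i.e. wrongly "wolf"
    for name, score in occlusion_importance(shortcut_model, husky_in_snow).items():
        print(f"{name:16s} importance = {score:.2f}")
```

An explanation that puts nearly all the weight on `snow_background` is exactly the kind of red flag the wolf/husky study surfaced: good test accuracy can hide a model that has learned the wrong thing.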

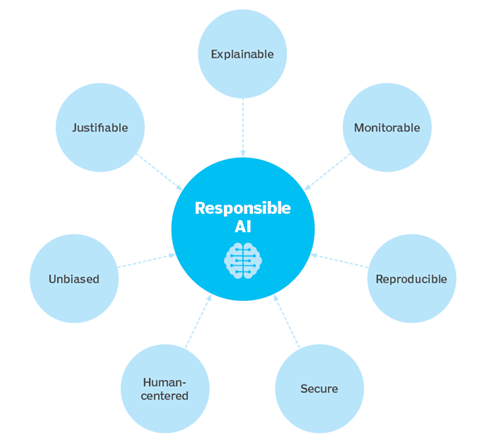

Fig: Components of Responsible AI

Source: TechTarget

AI and machine learning algorithms are spreading through society at an accelerating rate, and stakeholders are increasingly asking for explanations of their outputs. These stakeholders, including regulators, domain experts, and system developers, have different explanation needs. To address this, researchers at IBM released AI Explainability 360 (AIX360), an open-source software toolkit that provides eight state-of-the-art explainability methods and two evaluation metrics. They also provide a taxonomy that helps those seeking explanations navigate the space of explanation methods, covering not only the methods in the toolkit but also those in the broader explainability literature. AIX360's extensible software architecture organises methods according to their position in the AI modelling pipeline. Finally, the authors made the toolkit accessible to different audiences and applications through simplified algorithms, tutorials, and a web demo. As more explainability methods are developed, the toolkit and taxonomy can serve as a platform for identifying gaps and for incorporating methods that fill them.
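To make "explanation" concrete for a Fintech audience, here is a minimal, self-contained sketch of the idea behind contrastive (counterfactual) explanations: "what would have had to change for this applicant to be approved?" It does not use AIX360's API; it is a brute-force search over a hypothetical points-based scorecard. The feature names, point values, cutoff and allowed changes are all invented for illustration.

```python
from itertools import product
from typing import Optional

# Hypothetical points-based scorecard, invented for illustration only.
POINTS = {"income_lakh": 2, "existing_loans": -10, "years_employed": 3}
APPROVAL_CUTOFF = 20

# Hypothetical actionable changes: the effect of one "step" on each feature.
STEPS = {"income_lakh": 1, "existing_loans": -1, "years_employed": 1}
MAX_STEPS = 5  # search at most this many steps per feature


def score(applicant: dict) -> int:
    return sum(POINTS[f] * applicant[f] for f in POINTS)


def is_approved(applicant: dict) -> bool:
    return score(applicant) >= APPROVAL_CUTOFF


def counterfactual(applicant: dict) -> Optional[dict]:
    """Brute-force search for the approved variant that needs the fewest total steps."""
    features = list(STEPS)
    best_cost, best = None, None
    for counts in product(range(MAX_STEPS + 1), repeat=len(features)):
        candidate = dict(applicant)
        for feature, n in zip(features, counts):
            candidate[feature] += STEPS[feature] * n
        if any(value < 0 for value in candidate.values()):
            continue  # e.g. an applicant cannot hold fewer than zero loans
        cost = sum(counts)
        if is_approved(candidate) and (best_cost is None or cost < best_cost):
            best_cost, best = cost, candidate
    return best


if __name__ == "__main__":
    applicant = {"income_lakh": 6, "existing_loans": 2, "years_employed": 3}
    print(score(applicant), is_approved(applicant))  # 1 False
    print(counterfactual(applicant))
    # {'income_lakh': 6, 'existing_loans': 0, 'years_employed': 3}
```

Read as an explanation, the result says "you would have been approved with two fewer existing loans". A regulator, a loan officer and the applicant would each want that framed differently, which is why a single explanation rarely serves every stakeholder.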

Why is AI important for Fintechs?

AI is among the most powerful innovations in today's market. It makes it possible to organise billions of data points, create personalised experiences, anticipate customer needs, and manage cybersecurity and fraud risks. As the financial services industry embraces this potential, it should also consider and analyse the risks and barriers, big or small, to adopting the technology.

To ensure ethical decision-making and data privacy in financial services, European authorities have created standards for handling personal data and training models. Regulations such as the General Data Protection Regulation (GDPR) give individuals greater control over their data, but they also pose challenges for the industry when developing AI solutions, such as obtaining consent for AI experiments and deleting or anonymising all personal data that is not necessary.
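One practical way to honour the "not necessary" part is data minimisation before training: keep only the fields a model needs and replace direct identifiers with a keyed hash. The sketch below assumes a hypothetical credit dataset; the field names, sample record and key are made up. Note that under GDPR, pseudonymised data of this kind is still personal data, so this step alone does not amount to anonymisation.

```python
import hashlib
import hmac

# Hypothetical: the only fields the credit model actually needs.
MODEL_FIELDS = {"monthly_income", "existing_loans", "repayment_delays"}


def minimise_record(record: dict, secret_key: bytes) -> dict:
    """Drop fields the model does not need and replace the customer ID with a keyed hash.

    Note: this is pseudonymisation, not anonymisation. Whoever holds secret_key
    can recompute the token for a known customer ID and re-link the record,
    so the output is still personal data.
    """
    reduced = {k: v for k, v in record.items() if k in MODEL_FIELDS}
    reduced["customer_token"] = hmac.new(
        secret_key, record["customer_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return reduced


if __name__ == "__main__":
    raw = {
        "customer_id": "C-1042",  # made-up sample record
        "name": "A. Sample",
        "phone": "+91-90000-00000",
        "monthly_income": 85000,
        "existing_loans": 1,
        "repayment_delays": 0,
    }
    print(minimise_record(raw, secret_key=b"store-me-in-a-vault"))
```

A keyed hash (HMAC) is used instead of a plain hash so that someone without the key cannot rebuild tokens by hashing guessed customer IDs.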

AI regulation is entering a new phase in the U.S. and internationally. The National Institute of Standards and Technology (NIST), part of the U.S. Department of Commerce, has proposed a plan for federal engagement in AI standards, inviting federal agencies to work on a range of AI standards, including some that could form the basis of a regulatory framework.

Financial services providers are responsible for the quality and reliability of their data, and they should be aware of the implications and impacts of their technology. Given the complexity and scope of the tasks AI models are typically assigned, there is a real danger that they go wrong. For instance, a model built without proper guidance and training can produce biased outputs, with potentially damaging consequences. Good governance isn't just a risk management exercise: when done right and communicated clearly, it can drive business and customer loyalty. According to a study by Capgemini, 62% of consumers are more likely to trust a company whose AI is understood to be ethical, 61% are more likely to refer that company to friends and family, and 59% are more loyal to it.
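One simple check for the kind of bias described above is to compare approval rates across customer groups. The sketch below computes a disparate impact ratio (lowest group approval rate divided by the highest) on made-up decisions. The group labels and data are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb from US employment guidance, used here only as an illustrative trigger for review rather than as a legal standard for lending. A single metric like this cannot establish fairness on its own.

```python
from collections import Counter

FOUR_FIFTHS = 0.8  # common rule-of-thumb threshold, not a legal test for lending


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, approvals = Counter(), Counter()
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: dict) -> float:
    """Lowest group approval rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


if __name__ == "__main__":
    # Made-up model decisions for two hypothetical applicant groups.
    decisions = (
        [("group_a", True)] * 80 + [("group_a", False)] * 20
        + [("group_b", True)] * 50 + [("group_b", False)] * 50
    )
    rates = approval_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    print(rates)  # {'group_a': 0.8, 'group_b': 0.5}
    print(f"disparate impact ratio = {ratio:.3f}, flagged = {ratio < FOUR_FIFTHS}")
```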

Good governance and effective controls will become increasingly important as AI applications become more powerful and widespread. By staying informed about how these models work, what they contribute to decision-making, what risks they entail, and the potential impact of any error in the system, we can bring AI-driven Fintech applications to market without compromising our other responsibilities.
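One concrete control of this kind is monitoring whether the data a model sees in production still resembles the data it was trained on. A metric widely used in credit risk for this is the Population Stability Index (PSI). The sketch below is minimal and rests on assumptions: the score bands and proportions are made up, and the 0.1 / 0.25 bands are a commonly quoted industry rule of thumb, not a regulatory requirement.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions, each a list of bin proportions.

    PSI = sum over bins of (actual - expected) * ln(actual / expected).
    eps guards against empty bins, where the log would be undefined.
    """
    if len(expected) != len(actual):
        raise ValueError("expected and actual must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi: float) -> str:
    # Commonly quoted rule-of-thumb bands; each firm should set its own.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift: investigate"
    return "significant shift: review the model"


if __name__ == "__main__":
    # Hypothetical share of applicants per credit-score band at training time vs. today.
    at_training = [0.25, 0.25, 0.25, 0.25]
    this_month = [0.10, 0.20, 0.30, 0.40]
    psi = population_stability_index(at_training, this_month)
    print(f"PSI = {psi:.3f} -> {drift_status(psi)}")
```

A shift like this does not prove the model is wrong, but it tells the team that the model is now being used on a population it was not trained on, which is exactly when errors become more likely.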

References

NITI Aayog Responsible AI Report: https://www.niti.gov.in/sites/default/files/2021-02/Responsible-AI-22022021.pdf

Wolf vs Husky Example: https://arxiv.org/abs/1602.04938

AIX360 toolkit: https://aix360.mybluemix.net/

Best Practices for Responsible AI (TechTarget): https://www.techtarget.com/searchenterpriseai/definition/responsible-AI

AIX360 toolkit and taxonomy paper (One Explanation Does Not Fit All): https://arxiv.org/abs/1909.03012